The scope of local search methods goes beyond the “classical” approach of seeking the shortest path to a goal state in an observable, deterministic, and discrete environment. In local search, the path is irrelevant. It operates on the current node only (with a complete-state formulation) rather than on multiple paths simultaneously (no frontier is involved).

Local search looks for the extrema of an objective function in a non-systematic way, but it is complete, as it always finds reasonable solutions if they exist. Thus, it is adequate for optimisation problems. However, it is only guaranteed to be optimal if one single (global) extremum exists. Otherwise, it will get stuck on any of the local extrema.

Hill climbing in detail

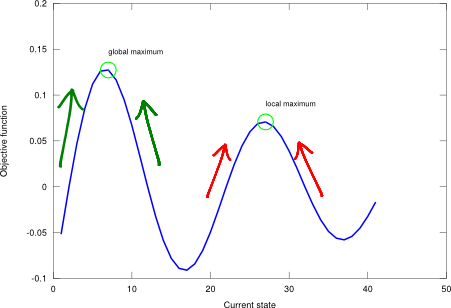

Hill climbing, aka steepest-ascent, is a greedy approach that operates in a loop that continually moves in the direction of increasing value, that is, uphill, until it reaches a “peak” (i.e., a maximum) where no neighbour shows a higher value, see Figure 1.

Figure 1. Hill climbing applied on a one-dimensional state-space landscape described by an example objective function .

Hill climbing gets stuck at local maxima, plateaus and ridges. In case of failure (i.e., not reaching the global maximum), you may restart the process with a different initial value. Hill climbing displays a unit space complexity (i.e., the current state) and a linear time complexity (with respect to the number of neighbouring states). Figure 2 implements this algorithm on a one-dimensional state-space.

Figure 2. Hill climbing algorithm.

-- Hill climbing algorithm.

--

-- PRE:

-- problem - must be an array of costs, index indicates state (table).

-- start - must be the starting state index (number).

--

-- POST:

-- solution - is the solution state (number).

function hill_climbing(problem, start)

local current = start

local neighbour

local change

while (true) do

if (current == 1) then

neighbour, change = eval_right(problem, current)

elseif (current == #problem) then

neighbour, change = eval_left(problem, current)

else

neighbour, change = eval_left(problem, current)

if (change == false) then

neighbour, change = eval_right(problem, current)

end

end

if (change) then

current = neighbour

else

return current

end

end

end

function eval_left(problem, current)

if (problem[current - 1] > problem[current]) then

return current - 1, true

else

return current, false

end

end

function eval_right(problem, current)

if (problem[current + 1] > problem[current]) then

return current + 1, true

else

return current, false

end

end

Despite the (arguably) poor performance of hill climbing to find the global maximum, it is an intuitive algorithm that provides a lot of insight into the nature of the optimisation problems and a rule of thumb to solve them. The next posts will address this lack of skill and suggest more powerful optimisation routines. Stay tuned!

2 thoughts on “Local search and the hill climbing algorithm”

Comments are closed.